The PPU thread

This is a deep rabbit hole, inspired by 8-Bit Guy's frustration that, for his Commander X16 project, he's gotta have an FPGA for generating video, or some other chip many many times more powerful than the 6502-based CPU at his machine's core.

So, what are the other options? In sum:

Cheap, Period Authentic, HDMI compatible. Pick two.

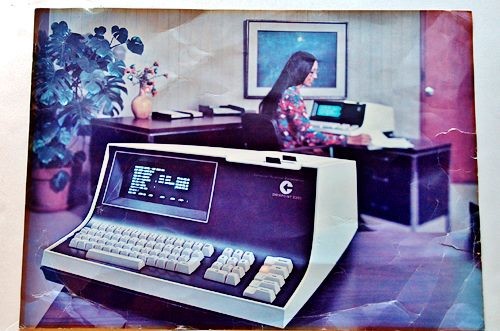

So it's the 1980's and you're designing a microcomputer. To save on production costs, you want the video to be generated by a standard part. Popular choices in the early 1980's are

- Texas Instruments TMS9918, used in the MSX 1 and others

- Motorola 6845 which basically only generates the timing signals and creates a binary address counter which could be routed to a VRAM module for fetching pixels to be drawn. Like half a GPU.

- the Mullard SAA5050 is a curious case: present in the BBC Micro along with the 6845, it's a chip designed to generate on-screen graphics for the teletext standard

- as near as i can tell, beyond these three, the market for “standard” ppu parts was pretty stagnant. Sega, and the MSX standard which used the Texas Instruments parts, got Yamaha to make enhanced versions of the chip for their Master System, Mega Drive/Genesis, and MSX2. consequently, Yamaha’s V9938 chips were sorta available

Yamaha is still in the business of making and selling PPU's in the same sprite based/pattern based architectures. There's a list of their products on their website here.

Graphic Controller - Electronic Devices - Yamaha Corporation

a PIC chip that is allegedly capable of generating a 320x240x8bpp VGA signal, with a CLUT for palette cycling, that could possibly be used to decode my weird codec i am working on: the PIC24FJ256DA210
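to make the CLUT idea concrete, here's a minimal sketch of palette cycling on an indexed-colour framebuffer. it is not the PIC's actual API. the names `framebuffer`, `palette`, `cycle_palette` and `resolve` are all made up for illustration, and the grey-ramp palette is an assumption. the point is just that the pixels never change, only the lookup table does.

```python
# Toy indexed-colour framebuffer: pixels store palette indices, not colours.
WIDTH, HEIGHT = 320, 240

# One byte per pixel at 8bpp, so 320 * 240 = 76,800 bytes in total.
framebuffer = bytearray(WIDTH * HEIGHT)

# Hypothetical 256-entry CLUT of (r, g, b) tuples: a simple grey ramp.
palette = [(i, i, i) for i in range(256)]


def cycle_palette(clut, first, last):
    """Rotate entries first..last (inclusive) by one slot, in place."""
    segment = clut[first:last + 1]
    clut[first:last + 1] = segment[-1:] + segment[:-1]


def resolve(fb, clut, x, y):
    """Look up the colour actually shown at pixel (x, y)."""
    return clut[fb[y * WIDTH + x]]


framebuffer[0] = 10              # pixel (0, 0) uses palette slot 10
before = resolve(framebuffer, palette, 0, 0)
cycle_palette(palette, 8, 15)    # animate slots 8..15 only
after = resolve(framebuffer, palette, 0, 0)
```

the framebuffer byte is untouched by the cycle, but the colour on screen changes, which is why this was such a cheap way to animate water, fire and the like.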

the Colour Maximite 2 uses something called an ART ACCELERATOR. (such an exciting name for a graphics chip), this is a core on its system-on-a-chip adjacent to its ARM core that boils down to hardware acceleration for pixel oriented 2D-rect DMA and pixel format conversion. blitting, in other words.

the RA8876 is a mass produced 2D “graphics engine” that outputs RGB values and is often paired with a CH7035B which can output to HDMI. these are available as an HDMI arduino shield and can be driven by a quite low powered device. but, while these are somewhat “standard” parts, they are many times more powerful than even an arduino.

the f18a is an FPGA implementation of the texas instruments 9918

https://dnotq.io/f18a/f18a.html

so it’s 2020, and most people don’t have a CRT based television or vga monitor laying around. they’re actually rare antiques, likely never to be made ever again.

but the 1980s micros had an ineffable hacker feeling, an ethic, where somehow, you were able to coax a video signal that could drive a CRT out of a circuit. the CPU that you’ve attached is just incidental.

so is, THAT the feeling to capture? if so, how and with what?

so there’s 5 main options for retro-hacking a ppu/display system, depending on your ethic/aesthetic/commercial/project goals

- full old school generating CRT signals on the CRT that you still have for some reason. (standard pong project kit)

- generating vga signals that can be interpreted by some LCD panels (achievable, but the flat panel vga is maybe cheating?)

- not worrying about cpu power purism and just generating hdmi signals using whatever is cheaply available (gameduino, maximite)

- focus on directly driving a flatpanel display, sidestepping the wasteful signal conversion steps. e.g. the ili9341 panels that are packed with a driver chip and their own mcu which accepts a commandstream over a serial connection. or you could go hardcore and design your own driver. i guess.

- invent an entirely new kind of display. spinning LED strip. ferrofluid. high speed inkplotter. a clickity clacker. the rad 3d pin displays from xmen. sky’s the limit.

normally the goal here is doing something about this nagging feeling that hobby electronics just aren’t as accessible to newcomers as they were in say, the 1970s or 1980s, partly because the devices that are normalised now have at a minimum an inscrutably complex silicon chip controlling them with no observable parts.

this may be a problem. but it might, in fact, not be a problem at all. after all, we weren’t particularly concerned about 6502’s having no visible vacuum tubes.

there’s arguments to be made about capitalism and globalism, and resilience to supply chain failure, sure.

silicon crystals are still gonna need 6 months to grow in a million dollar machine. the industrial infrastructure to make this stuff actually from scratch needs expensive investments. so if we wanna smash the state and seize the means of production DIY, decide how far we’re willing to go with that realistically. me? i think simple fantasy consoles and arm chips running linux are just fine.

as far as video is concerned, quite a lot of the IP on old 1990’s consoles has expired, so there’s nothing stopping cheap clones of any popular 1980’s or 1990’s console video chip from being mass manufactured other than needing some kind of market to justify the expense.

what concerns me though is that past the snes/genesis era level video hardware, there’s not a lot of stuff in the public domain for say, even psx or n64 level graphics.

is that even a problem if you’re not an open source foss purist?

in my view, the problem that really needs solving is stable platforms upon which to build software. it’s possible to preserve things from until around 2005 because 1. the platforms they were written for are no longer changing. 2. they tended not to (heavily) depend on running servers. we can emulate this stuff. we can build clones. we can even write homebrew. we have much less confidence that the software we’re writing now has any longevity. even the awesome first wave of 2007 iphone apps

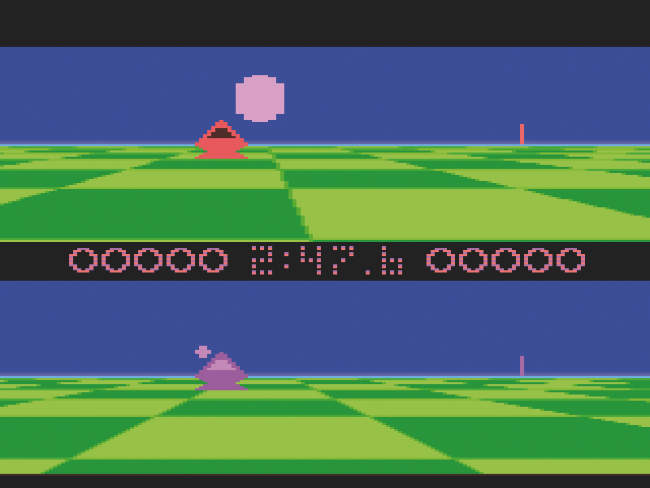

the General Instrument AY-3-8900-1 was the ppu on the Intellivision. it had a resolution of 160x96 pixels composed of 8x8 tiles, and had 8 8x8 sprites, and 16 colors (total).

the Atari ANTIC (1978) was a custom graphics "coprocessor" that would execute a program, called a "display list", written in its own graphics-specific instruction set. much like modern GPUs. It was used in the Atari 8-bit computers and the Atari 5200 (the Atari ST used different video hardware). It had hardware smooth scrolling. It sort of only output pixel data, and a separate chip would generate the video signal. I don't really follow how this works or what modes it has available.

ah the VIC-II and the Commodore 64. I've never been a fan of the look of the c64 palette, but plenty enough people have nostalgia for it. according to wikipedia, they made this gpu to cherrypick the best features of the ANTIC and the TMS9918.

it's got

- 320 × 200 pixels

- 40 × 25 characters text

- 16 colors

- 8 sprites

- collision detection

- raster interrupts

- petscii inside

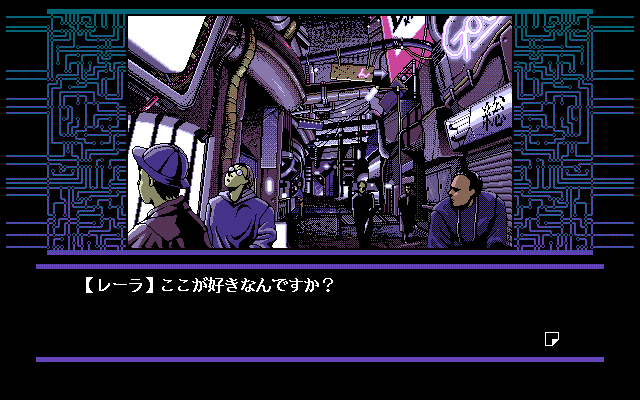

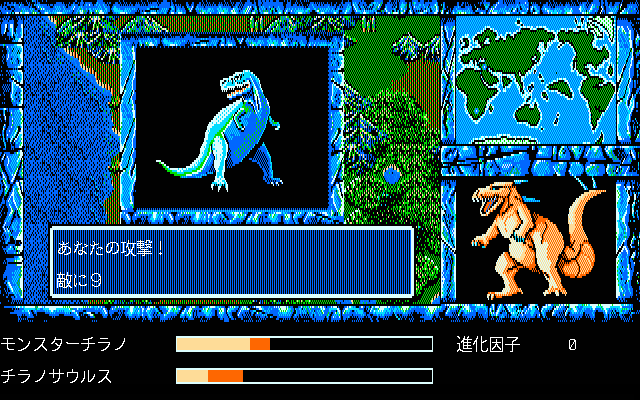

the NEC µPD7220 first released on the PC-9801 in japan in 1982.

japanese writing had some unique requirements, so wow, they really powered it up. This thing had hardware acceleration for things like lines and rectangles and what not. So of course it got used for lots and lots of porn games.

It was such an impressive chip that, if I'm reading this right, DirectX 7's 2D API is based on its features. Checking "2D acceleration" switches this chip on. Maybe.

A lot of what we think of as a "PPU" and its various features are really just elaborate work arounds for the relative expense of memory- particularly in the case of the only PPU that is actually called a "PPU", the NES's graphics chip.

By contrast, a Mac 128k doesn't seem to have anything like this. Instead it just has enough memory to store each pixel as a bit, twice over, and the memory is fast enough to simply read each pixel as the CRT raster scans. Everything else is done in software.

the big "trick" of the NES and C64 PPUs comes from video terminals and so called "Character Generator" circuits. These amber glass typewriter emulators could get away with as little as about 2 KB of RAM (25x80 characters, one byte each), which would be used as lookups for character graphics in a much cheaper ROM chip. A counter would step through the appropriate addresses in the ROM as the raster scanned, and the "video shift register" would clock each fetched row of pixels out to the screen. So you get to simulate a high res display without needing a lot of expensive RAM.
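here's a rough sketch of that character generator trick, to show where the memory saving comes from. it assumes 8x8 glyphs, one byte per character cell, and a made-up font ROM with only two glyphs filled in. `FONT_ROM`, `screen_ram` and `scanline_bits` are illustrative names, not taken from any real terminal or console.

```python
COLS, ROWS = 80, 25
GLYPH_W, GLYPH_H = 8, 8

# Hypothetical font ROM: 8 bytes per character code, one byte per pixel row.
# Only two glyphs are filled in; every other code stays blank.
FONT_ROM = bytearray(256 * GLYPH_H)
FONT_ROM[ord("A") * GLYPH_H:(ord("A") + 1) * GLYPH_H] = bytes(
    [0x18, 0x24, 0x42, 0x7E, 0x42, 0x42, 0x42, 0x00])
FONT_ROM[ord("-") * GLYPH_H:(ord("-") + 1) * GLYPH_H] = bytes(
    [0x00, 0x00, 0x00, 0x7E, 0x00, 0x00, 0x00, 0x00])

# Screen RAM holds one character code per cell, nothing else.
screen_ram = bytearray(b" " * (COLS * ROWS))
screen_ram[0:3] = b"A-A"


def scanline_bits(y):
    """Yield the pixels (0 or 1) of raster line y, left to right."""
    cell_row, glyph_row = divmod(y, GLYPH_H)
    for col in range(COLS):
        code = screen_ram[cell_row * COLS + col]          # RAM lookup
        row_byte = FONT_ROM[code * GLYPH_H + glyph_row]   # ROM lookup
        for bit in range(7, -1, -1):                      # shift out, MSB first
            yield (row_byte >> bit) & 1


ram_bytes = len(screen_ram)                               # text screen in RAM
bitmap_bytes = COLS * GLYPH_W * ROWS * GLYPH_H // 8       # same screen as 1bpp bitmap
line3 = "".join("#" if b else "." for b in scanline_bits(3))
```

under these assumptions the text screen costs 2,000 bytes of RAM, while a plain 1bpp bitmap of the same 640x200 picture would need 16,000. the difference is paid for in ROM, which was the cheap part.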

The really clever thing the NES did in particular, which no other games console did, was include two rom chips on the standard cartridges, one of which would get wired directly to the video circuitry as the character generator rom. This meant the NES console itself could get away with a similarly minuscule amount of RAM, and get "arcade" level graphics by including different "fonts" with each game. As a bonus, the glitches you would get from a badly connected cartridge were RAD AS HELL

So the thing to note though, is that the NEC µPD7220 and the PC-9801 released YEARS before the NES and the Mac, and could do 16 color graphics on a 1024x1024 pixel framebuffer, with hardware accelerated drawing, far superior to anything else at the time, for many years. But, what makes the Mac and the NES clever is their economy. The Mac still had a luxurious amount of video ram, but managed with just software. the NES used a clever trick to make it seem like it had more power than it did.

the common thread is constraints on the BOM, the cost of the device, directly informed by the cost of parts at the time of their production, not a nostalgic purity. and it's the creativity that came out of those constraints that hooks us, and gives these devices their character. We can copy it, but it wouldn't be authentic to our times. The authentic question for us would be: How would you build the cheapest possible games console now? what clever hacks could you use?

okay, here’s a weird idea.

what if a game engine were designed to generate an h.264 (or some other video) stream directly? lots of cheap hardware has decoders for video built in. how efficiently could a program simply spit out i-frames?

here’s a thing i didn’t know about explicitly: blitter chips

these were specific bits of hardware for speeding up the copying of rectangles of various pixel formats with transparency into a framebuffer.

this is distinct from a PPU with hardware sprite support, as those graphics would be generated on the fly, as the CRT beam scanned, using clever memory pointer tricks.
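a small sketch of what "generated on the fly" means here: there is no framebuffer at all, just a function that works out one scanline at the moment the beam needs it. the sprite table layout, the 8x8 size, colour 0 as transparent, and the rule that earlier table entries win overlaps are all assumptions for illustration, not any particular chip's behaviour.

```python
SCREEN_W = 32
SPRITE_W = SPRITE_H = 8
TRANSPARENT = 0

# Hypothetical sprite attribute table: a position plus an 8x8 pattern each.
sprites = [
    {"x": 4, "y": 2, "pattern": [[1] * SPRITE_W for _ in range(SPRITE_H)]},
    {"x": 8, "y": 5, "pattern": [[2] * SPRITE_W for _ in range(SPRITE_H)]},
]


def render_scanline(y, background=0):
    """Build the pixels of raster line y directly from the sprite table."""
    line = [background] * SCREEN_W
    # Draw later entries first so that earlier entries end up on top.
    for sprite in reversed(sprites):
        row = y - sprite["y"]
        if not 0 <= row < SPRITE_H:
            continue                      # sprite does not touch this line
        for col, colour in enumerate(sprite["pattern"][row]):
            x = sprite["x"] + col
            if colour != TRANSPARENT and 0 <= x < SCREEN_W:
                line[x] = colour
    return line


line6 = render_scanline(6)   # a line where both sprites overlap
```

moving a sprite is just changing two numbers in the table. nothing gets copied or erased, because the picture only ever exists one line at a time.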

a blitter is more of a brute force, huge chunk of memory based technique. GPU precursors.
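for comparison, a blit is roughly this loop, done in hardware instead of by the CPU. this is a minimal software sketch with a colour-key style of transparency. the `blit` signature and the flat one-byte-per-pixel buffers are my own assumptions, and real blitters differ a lot in which pixel formats and operations they support.

```python
def blit(dst, dst_w, dst_h, src, src_w, src_h, dx, dy, key=None):
    """Copy a src_w x src_h rectangle into dst at (dx, dy), clipped to dst.

    Pixels equal to `key` are treated as transparent and skipped.
    """
    for sy in range(src_h):
        y = dy + sy
        if not 0 <= y < dst_h:
            continue                      # row falls outside the destination
        for sx in range(src_w):
            x = dx + sx
            if not 0 <= x < dst_w:
                continue                  # column falls outside the destination
            pixel = src[sy * src_w + sx]
            if pixel != key:
                dst[y * dst_w + x] = pixel


# An 8x4 framebuffer and a 2x2 tile whose 0 pixels are transparent.
fb = bytearray(8 * 4)
tile = bytes([0, 5,
              5, 0])
blit(fb, 8, 4, tile, 2, 2, 1, 1, key=0)

# A solid 2x2 tile hanging off the bottom right corner: only one pixel lands.
solid = bytes([7, 7, 7, 7])
blit(fb, 8, 4, solid, 2, 2, 7, 3)
```

unlike the sprite approach, the copied pixels really are written into memory, so moving something means restoring what was underneath and blitting again.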

so the HD63484 came out around 1984, as a sort of riff on the NEC chip. it can in theory go up to 4096x4096 pixels at 1bpp, and was allegedly used in CAD and desktop publishing equipment. I can't find any examples of its output, but here's an implementation of it in MAME for some reason. What arcade games used it?

MAMEHub/hd63484.h at master · MisterTea/MAMEHub · GitHub

just learned 8bit guy is an open carry no masker transphobic racist dickhead so probably the actual reason he isn’t satisfied with PPU solutions that actually work in 2020 is he’s an idiot.

Smiffi Surgubben Wears a Mask @grumpysmiffy@aus.social @zensaiyuki I remember the word, if nothing more.

@grumpysmiffy “blit” routines are common in low level graphics APIs like SDL. the thing about those though is the documentation remains intentionally vague about exactly how they’re implemented, as they may ultimately translate down to just a dumb software copy with no hardware acceleration at all.

@grumpysmiffy the “news” i wasn’t aware of was that these routines ever existed as a specific and discrete piece of hardware, separate from the mysterious “video card” epoxy blob

Smiffi Surgubben Wears a Mask @grumpysmiffy@aus.social @zensaiyuki I remember seeing it touted as an exciting feature in some newly released machine, in a computer magazine*, back in the late 80's, I think.

*I'd forgotten that computer magazines were even ever a thing, let alone that I used to buy them.

Robin Frousheger @froosh@aus.social @zensaiyuki the amiga blitter was my first introduction to co-processing and risc

Spindley Q Frog @SpindleyQ@mastodon.social @zensaiyuki a quick search through the source reveals 2 drivers: "shanghai" and "taitob"

games supported by those drivers:

MAMEHub/shanghai.c at f770145881b4f0c601f0bf3d0cdd4177de1f6f00 · MisterTea/MAMEHub · GitHub

MAMEHub/taito_b.c at f770145881b4f0c601f0bf3d0cdd4177de1f6f00 · MisterTea/MAMEHub · GitHub

@SpindleyQ I did manage to find a couple of those.

the complication with the taito_b board is that it uses the hitachi chip in the emulation, but I can find little documentation of it in anything about the taito_b board. that aside, the taito_b board had a custom video chip which either had the hitachi inside it somewhere, or did its own thing which is... a lot of things.

Looking at the games, it's unclear what the hitachi is doing...

@SpindleyQ I guess what I'm saying is, it's a chip that accelerates drawing shape primitives into a frame buffer, which isn't exactly groundbreaking in 2020, but in the interests of history, it's hard to find a specific example of the chip actually doing this.

@SpindleyQ but, on the other hand, a slightly newer chip that does much of the same stuff, the HD63705, is in a whole bunch of flat shaded 3d polygonal games on a board called Namco System 21. (Hard Drivin' is often mentioned in the same breath, but it ran on Atari Games hardware, not System 21.) so, according to some of the sources I've read, it's the second ever "GPU".

(the first being the NEC chip it's a more powerful clone of)

@SpindleyQ there is one concrete place i was able to find the original hitachi chip, where it's probably working its business in full. the Anritsu MS2601A oscilloscope.

not exactly the most impressive graphical thing, but it sure is drawin' lots of lines.

Spindley Q Frog @SpindleyQ@mastodon.social @zensaiyuki yeah, you'd have to, like, set a breakpoint in the chip code and run some games, or set up a custom MAME build that comments out the integration of that chip and see what graphical glitches result

@SpindleyQ And… according to another article I read, apparently Texas Instruments cloned it into more of a system-on-a-chip, a complete sorta video chip system, and shopped it around to Nintendo and Sega in 1988 to do a full 3D console. in 1988.